Using a Windows 10 Device as a Proxmox Corosync QDevice

For my inaugural post, I'm going to describe how I got my Windows 10 file server, which already sits on the Proxmox VE (PVE) cluster network, to function as a Corosync QDevice (witness). Guides generally recommend a small device such as a Raspberry Pi for this role, but my Windows 10 machine already had a relationship with the PVE cluster, so I didn't see the point of buying, configuring and maintaining another device solely to act as a witness. The Windows 10 machine provides network storage for backups and ISOs, so its participation in the setup is just as important to me as any of the nodes.

There is no Windows build of corosync-qnetd, the daemon that runs on the external QDevice host, so to host it I installed and configured Windows Subsystem for Linux (WSL). You can read more about WSL from Microsoft here: https://learn.microsoft.com/en-us/windows/wsl/about.

The first step is to install WSL2, following Microsoft's guide: https://learn.microsoft.com/en-us/windows/wsl/install

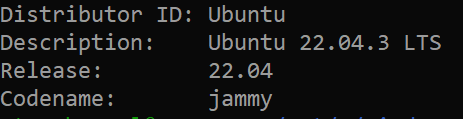

I just followed the default installation configuration. Here's what I ended up with in the WSL2 deployment:

With WSL2 available, I installed the corosync-qnetd package, which provides the QNetd daemon that the cluster's QDevice connects to. At the WSL2 shell, run:

```bash
sudo apt install corosync-qnetd
```

The install process output should look something like this:

```text
Reading package lists... Done
Building dependency tree... Done
Reading state information... Done
0 upgraded, 1 newly installed, 0 reinstalled, 0 to remove and 68 not upgraded.
Need to get 63.6 kB of archives.
After this operation, 0 B of additional disk space will be used.
Get:1 http://archive.ubuntu.com/ubuntu jammy/universe amd64 corosync-qnetd amd64 3.0.1-1 [63.6 kB]
Fetched 63.6 kB in 1s (88.4 kB/s)
(Reading database ... 27255 files and directories currently installed.)
Preparing to unpack .../corosync-qnetd_3.0.1-1_amd64.deb ...
Unpacking corosync-qnetd (3.0.1-1) over (3.0.1-1) ...
Setting up corosync-qnetd (3.0.1-1) ...
Processing triggers for man-db (2.10.2-1) ...
```

Once the install completes, the QNetd daemon is available inside WSL2.
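Before wiring up the port proxy, it may help to confirm from inside WSL2 that something is actually listening on the two ports this post forwards: 5403, the default corosync-qnetd port, and 22, because `pvecm qdevice setup` logs in to this host over SSH (see the `ssh-copy-id` lines in its output later in this post). This is a minimal sketch that assumes the `ss` tool from iproute2 is installed in the distribution; `listening_on` is a hypothetical helper name, not part of any package.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the original setup): reads `ss -ltn` output
# on stdin and succeeds if any TCP listener's local address ends in ":PORT".
listening_on() {
  local port="$1"
  # Column 4 of `ss -ltn` is "Local Address:Port", e.g. 0.0.0.0:5403 or [::]:22.
  awk -v port="$port" '$4 ~ (":" port "$") { found = 1 } END { exit !found }'
}

# Example usage inside the WSL2 shell:
if ss -ltn | listening_on 5403; then
  echo "corosync-qnetd appears to be listening on 5403"
else
  echo "nothing listening on 5403; check that corosync-qnetd is running"
fi
if ! ss -ltn | listening_on 22; then
  echo "nothing listening on 22; pvecm qdevice setup needs SSH access to this host"
fi
```

How you start the daemon if it isn't listening depends on how your WSL2 distribution manages services (for example, `systemctl` only works if systemd is enabled in WSL).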

Notably, the WSL2 install creates a NIC (Hyper-V Virtual Network Adapter) that carries traffic between the Windows host and WSL2. This matters because, at least in my case, I had to write a script that sets up a port proxy between the physical NIC carrying the PVE cluster traffic and the WSL2 vNIC, and run it every time the Windows 10 computer boots.

I haven't found a way to make the configuration persistent, so after each boot I simply run the script with administrator elevation. I already have a few things to do on that machine after each boot, so running the script is trivial for me; you may prefer to set it up as a scheduled task. Here are the four commands the script runs to allow communication between the PVE cluster nodes and the WSL-hosted QDevice:

```bat
netsh interface portproxy delete v4tov4 listenport=22 listenaddress=192.168.10.10
netsh interface portproxy delete v4tov4 listenport=5403 listenaddress=192.168.10.10
netsh interface portproxy add v4tov4 listenport=22 listenaddress=192.168.10.10 connectport=22 connectaddress=172.19.17.245
netsh interface portproxy add v4tov4 listenport=5403 listenaddress=192.168.10.10 connectport=5403 connectaddress=172.19.17.245
```

You will need to adjust these values for your network. `listenaddress` is the IP address of the Windows machine's physical NIC on the cluster network, and `connectaddress` is the IP address of the vNIC created for WSL2. Together, these rules forward ports 22 (SSH) and 5403 (QNetd) from the physical interface to WSL2. The delete commands run before the add commands because, for reasons I haven't pinned down, the portproxy rules still exist after a reboot but no longer work, so I simply reset them.
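Because the WSL2 address (`connectaddress`) is the value most likely to need adjusting, a small generator can print the four commands for whatever addresses you pass in. This is a minimal sketch, not the script I actually run: `portproxy_cmds` is a hypothetical name, and falling back to the first address reported by `hostname -I` inside WSL2 is an assumption about your setup. WSL2's default NAT networking can give the VM a different address after a restart, which may be related to the stale rules mentioned above, so looking the address up at each boot may be more robust than hardcoding it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the netsh portproxy commands for the two ports
# the QDevice setup uses (22 for SSH, 5403 for corosync-qnetd).
portproxy_cmds() {
  local listen="$1"
  # Assumption: with no second argument, use the first address that
  # `hostname -I` reports inside the WSL2 distribution.
  local connect="${2:-$(hostname -I 2>/dev/null | awk '{print $1}')}"
  local port
  if [ -z "$listen" ] || [ -z "$connect" ]; then
    echo "usage: portproxy_cmds LISTEN_ADDR [WSL2_ADDR]" >&2
    return 2
  fi
  # Delete first, then re-add, mirroring the order of the commands above.
  for port in 22 5403; do
    echo "netsh interface portproxy delete v4tov4 listenport=${port} listenaddress=${listen}"
  done
  for port in 22 5403; do
    echo "netsh interface portproxy add v4tov4 listenport=${port} listenaddress=${listen} connectport=${port} connectaddress=${connect}"
  done
}
```

For example, running `portproxy_cmds 192.168.10.10` inside WSL2 prints the four lines, which you would then run from an elevated Command Prompt on the Windows host, since changing portproxy rules requires administrator rights.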

Your Corosync QDevice should now be ready. The next step, making it available to the PVE cluster, is straightforward. Open a shell on one of your PVE nodes and enter the following command, replacing 192.168.10.10 with the IP address of the Windows 10 device's physical NIC on the cluster network:

```bash
pvecm qdevice setup 192.168.10.10
```
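If the setup command hangs or fails to connect, one way to narrow it down is to check from the PVE node whether the two proxied ports answer on the Windows host's cluster address. This sketch uses bash's built-in `/dev/tcp` redirection (so netcat isn't needed) and the coreutils `timeout` command; the function names are hypothetical and the three-second timeout is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Hypothetical preflight check, run from a PVE node before `pvecm qdevice setup`.
# Succeeds if a TCP connection to HOST:PORT opens within 3 seconds.
port_open() {
  local host="$1" port="$2"
  timeout 3 bash -c ": </dev/tcp/${host}/${port}" 2>/dev/null
}

# Check both ports the Windows port proxy forwards; returns 1 if either fails.
check_qdevice_ports() {
  local host="$1" port rc=0
  for port in 22 5403; do   # 22: SSH used by setup, 5403: corosync-qnetd
    if port_open "$host" "$port"; then
      echo "${host}:${port} reachable"
    else
      echo "${host}:${port} NOT reachable" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example usage:
#   check_qdevice_ports 192.168.10.10
```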

If the process is successful, the output should look something like this:

```text
root@proxmox2:~# pvecm qdevice setup 192.168.10.10
/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
INFO: initializing qnetd server
INFO: copying CA cert and initializing on all nodes
node 'proxmox': Creating /etc/corosync/qdevice/net/nssdb
password file contains no data
node 'proxmox': Creating new key and cert db
node 'proxmox': Creating new noise file /etc/corosync/qdevice/net/nssdb/noise.txt
node 'proxmox': Importing CA
node 'proxmox2': Creating /etc/corosync/qdevice/net/nssdb
password file contains no data
node 'proxmox2': Creating new key and cert db
node 'proxmox2': Creating new noise file /etc/corosync/qdevice/net/nssdb/noise.txt
node 'proxmox2': Importing CA
node 'proxmox3': Creating /etc/corosync/qdevice/net/nssdb
password file contains no data
node 'proxmox3': Creating new key and cert db
node 'proxmox3': Creating new noise file /etc/corosync/qdevice/net/nssdb/noise.txt
node 'proxmox3': Importing CA
node 'proxmox4': Creating /etc/corosync/qdevice/net/nssdb
password file contains no data
node 'proxmox4': Creating new key and cert db
node 'proxmox4': Creating new noise file /etc/corosync/qdevice/net/nssdb/noise.txt
node 'proxmox4': Importing CA
INFO: generating cert request
Creating new certificate request
Generating key. This may take a few moments...
Certificate request stored in /etc/corosync/qdevice/net/nssdb/qdevice-net-node.crq
INFO: copying exported cert request to qnetd server
INFO: sign and export cluster cert
Signing cluster certificate
Certificate stored in /etc/corosync/qnetd/nssdb/cluster-PROXMOX-CLUSTER.crt
INFO: copy exported CRT
INFO: import certificate
Importing signed cluster certificate
Notice: Trust flag u is set automatically if the private key is present.
pk12util: PKCS12 EXPORT SUCCESSFUL
Certificate stored in /etc/corosync/qdevice/net/nssdb/qdevice-net-node.p12
INFO: copy and import pk12 cert to all nodes
node 'proxmox': Importing cluster certificate and key
node 'proxmox': pk12util: PKCS12 IMPORT SUCCESSFUL
node 'proxmox2': Importing cluster certificate and key
node 'proxmox2': pk12util: PKCS12 IMPORT SUCCESSFUL
node 'proxmox3': Importing cluster certificate and key
node 'proxmox3': pk12util: PKCS12 IMPORT SUCCESSFUL
node 'proxmox4': Importing cluster certificate and key
node 'proxmox4': pk12util: PKCS12 IMPORT SUCCESSFUL
INFO: add QDevice to cluster configuration
INFO: start and enable corosync qdevice daemon on node 'proxmox'...
Synchronizing state of corosync-qdevice.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable corosync-qdevice
Created symlink /etc/systemd/system/multi-user.target.wants/corosync-qdevice.service -> /lib/systemd/system/corosync-qdevice.service.
INFO: start and enable corosync qdevice daemon on node 'proxmox2'...
Synchronizing state of corosync-qdevice.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable corosync-qdevice
Created symlink /etc/systemd/system/multi-user.target.wants/corosync-qdevice.service -> /lib/systemd/system/corosync-qdevice.service.
INFO: start and enable corosync qdevice daemon on node 'proxmox3'...
Synchronizing state of corosync-qdevice.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable corosync-qdevice
Created symlink /etc/systemd/system/multi-user.target.wants/corosync-qdevice.service -> /lib/systemd/system/corosync-qdevice.service.
INFO: start and enable corosync qdevice daemon on node 'proxmox4'...
Synchronizing state of corosync-qdevice.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable corosync-qdevice
Created symlink /etc/systemd/system/multi-user.target.wants/corosync-qdevice.service -> /lib/systemd/system/corosync-qdevice.service.
Reloading corosync.conf...
Done
root@proxmox2:~#
```

You can verify the process completed successfully by running the following command on a PVE cluster node:

```bash
pvecm status
```

If everything is OK, you'll see the QDevice listed in the membership information with 1 vote, and "Expected votes" and "Total votes" will match:

```text
root@proxmox2:~# pvecm status
Cluster information
-------------------
Name: PROXMOX-CLUSTER
Config Version: 28
Transport: knet
Secure auth: on

Quorum information
------------------
Date: Mon May 19 00:11:39 2025
Quorum provider: corosync_votequorum
Nodes: 4
Node ID: 0x00000002
Ring ID: 1.4dd
Quorate: Yes

Votequorum information
----------------------
Expected votes: 5
Highest expected: 5
Total votes: 5
Quorum: 3
Flags: Quorate Qdevice

Membership information
----------------------
Nodeid Votes Qdevice Name
0x00000001 1 A,V,NMW 192.168.10.51
0x00000002 1 A,V,NMW 192.168.10.52 (local)
0x00000003 1 A,V,NMW 192.168.10.53
0x00000004 1 A,V,NMW 192.168.10.54
0x00000000 1 Qdevice
```
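To run this check from a script (for example, after the Windows machine reboots), the relevant lines of `pvecm status` can be parsed. This is a sketch that assumes the `Label: value` layout shown above; `check_qdevice_status` is a hypothetical name. For context, with four nodes plus the QDevice the expected votes are 5, and votequorum's default quorum is floor(5/2) + 1 = 3, which matches the `Quorum: 3` line above. Without the QDevice, quorum for four votes would also be floor(4/2) + 1 = 3, so a 2/2 split of the nodes would leave neither half quorate.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `pvecm status` output on stdin and succeeds only
# if Expected votes equals Total votes and the Flags line includes Qdevice.
check_qdevice_status() {
  awk -F': *' '
    /^Expected votes:/     { expected = $2 + 0; seen_expected = 1 }
    /^Total votes:/        { total = $2 + 0; seen_total = 1 }
    /^Quorum:/             { quorum = $2 + 0 }
    /^Flags:/ && /Qdevice/ { has_qdevice = 1 }
    END {
      if (!seen_expected || !seen_total) { print "could not parse vote counts"; exit 2 }
      if (expected != total) { printf "vote mismatch: expected %d, total %d\n", expected, total; exit 1 }
      if (!has_qdevice) { print "Qdevice flag not set"; exit 1 }
      printf "ok: %d/%d votes, quorum %d\n", total, expected, quorum
    }'
}

# Example usage on a PVE node:
#   pvecm status | check_qdevice_status
```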

As a final note, in my experience it's worth making sure all of your cluster traffic is on a dedicated network. I set up a dedicated switch just for cluster traffic, which resolved some intermittent communication issues that I suspect correlated with high traffic on the network.

Feel free to reach out using the information on the contact page if you have any feedback. At some point I'll enable comments and revisit this post to add them.

-Andrew

Topics: linux, proxmox pve, windows